Integrating the practices

The previous chapters looked at what security is, and provided a systematic way to get your software secure enough and to verify that you did this correctly. The next step is to integrate all of this into your project’s workflow.

Making security visible

The open source (“bazaar”) development model, as described in The Cathedral and the Bazaar, depends heavily on user involvement (“eyeballs”) [CATB]. It therefore makes sense to make security visible:

- If your co-developers do not know the security requirements, architectural threats or coding standard, they are less likely to create a secure piece of software.
- Reviewers have an easier job deciding where and what to look for.
- Visible security prompts end users to think actively about their own security needs.

This seems to have a downside: by giving away your security details, you make it easier for attackers to find security problems in your software and exploit them. This dilemma, however, predates software itself: the locksmith Alfred Hobbs pointed out that keeping lock vulnerabilities secret did not stop attackers from exploiting them, as the attackers were “very keen” and “know much more than we can teach them” [WikipediaObscurity].

In the long term, the benefits of being open about your software's security outweigh the downsides. In fact, this principle has long been recognized and practiced in the development of cryptographic algorithms [Schneier2002].

Yet some open source projects choose not to reveal security bug reports immediately. Responsible disclosure means publishing discovered vulnerabilities only after giving the developers time to address them [Kadlec]. For example, Mozilla flags security bug reports as “Security-Sensitive” and prevents disclosure of the bug until the security module owner removes the flag. To encourage people to report security bugs, a bug bounty program, such as Mozilla's, financially rewards reporters of vulnerabilities [Mozilla]. Be aware, however, that black and gray markets pay a lot more for interesting vulnerabilities that are still unknown to the vendor (so-called zero-day exploits) [Zerodium]. This is why Apple’s bug bounty program was not very successful in its first year [iPhoneHacks]. Note that selling exploits may be illegal in some jurisdictions, and because you do not know how the buyers will use your exploit, doing so can raise serious ethical concerns.

While your project may not have the resources to set up a bug bounty program, you can choose to have a private section of your issue tracker for security-sensitive issues, and a private source code branch for security fixes. In chapter 6 of his book Producing Open Source Software, Karl Fogel describes best practices for handling security vulnerability reports [Fogel].

At the core of making security visible is a list of all the threats that we have identified: the TMV (threat, mitigation, verification) list. For every identified threat (or, equivalently, security requirement), it lists:

- a description
- (optional) its impact
- a mitigation: how will we deal with this threat?
- a verification: how will we check that we did that correctly?
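A TMV list can live in a plain table. Keeping it as structured data also makes it possible to check it automatically. The following is a minimal Python sketch. It assumes a hypothetical `TmvEntry` record and a hypothetical `incomplete_entries` helper, neither of which comes from an existing tool, and it flags threats that still lack a mitigation or a verification. The second entry deliberately leaves its verification empty so the check has something to report.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TmvEntry:
    """One row of a TMV (threat, mitigation, verification) list; hypothetical."""
    threat: str
    mitigation: str               # how we deal with the threat, or why we accept it
    verification: str             # how we check the mitigation, or why the risk is acceptable
    impact: Optional[str] = None  # optional, as in the list above


def incomplete_entries(tmv_list: list[TmvEntry]) -> list[str]:
    """Return the threats that still lack a mitigation or a verification."""
    return [
        entry.threat
        for entry in tmv_list
        if not entry.mitigation.strip() or not entry.verification.strip()
    ]


tmv_list = [
    TmvEntry(
        threat="XPath injection in search form",
        mitigation="input validation: allow only alphanumeric characters, commas and spaces",
        verification="try searching with malicious input, see automated test",
    ),
    TmvEntry(
        threat="XML bomb when parsing XML configuration file",
        mitigation="accepted",
        verification="",  # deliberately left empty: the reason still has to be written down
    ),
]

if __name__ == "__main__":
    for threat in incomplete_entries(tmv_list):
        print("incomplete TMV entry:", threat)
```

A check like this could run alongside the other tests, so that an unverified threat does not go unnoticed in the list.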

If a threat is not mitigated, the mitigation column states the reason (e.g. accepted) and the verification column explains it in more detail (e.g. very low likelihood). For example:

| Threat | Mitigation | Verification |
|---|---|---|
| XPath injection in search form | Input validation: allow only alphanumeric characters, commas and spaces | Try searching with malicious input; see automated test |
| XML bomb when parsing XML configuration file | We accept the risk | The configuration file is assumed to be trusted and not under the control of an attacker. The installation script should set the right file permissions, and the manual should tell users to keep them that way. |
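The XPath row above pairs a whitelist mitigation with an automated test as its verification. Below is a minimal Python sketch of both. It assumes that "alphanumeric" means ASCII letters and digits only, which a project with non-English users may need to widen. The names `is_valid_search` and the test functions are hypothetical.

```python
import re

# Whitelist from the TMV example: ASCII letters, digits, commas and spaces only.
# Restricting to ASCII is an assumption of this sketch.
_ALLOWED_SEARCH = re.compile(r"[A-Za-z0-9, ]*")


def is_valid_search(term: str) -> bool:
    """Accept a search term only if every character is on the whitelist."""
    return _ALLOWED_SEARCH.fullmatch(term) is not None


def test_rejects_xpath_injection() -> None:
    # Verification column: "try searching with malicious input, see automated test".
    malicious = ["' or '1'='1", "foo\"] | //user/password", "a/b", "x[1]"]
    for term in malicious:
        assert not is_valid_search(term), term


def test_accepts_normal_search() -> None:
    assert is_valid_search("red shoes, size 42")


if __name__ == "__main__":
    test_rejects_xpath_injection()
    test_accepts_normal_search()
    print("all tests passed")
```

A whitelist is easier to verify than a blacklist of dangerous XPath characters. The test only has to show that input outside the allowed set is rejected.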

Security assumptions

We cannot anticipate every environment in which the software is used. Similarly, we cannot always satisfy everyone’s security needs. In such cases, we need to make security assumptions.

For example, if your users have wildly varying security needs, you can choose to make certain security aspects configurable. In that case, you are delegating part of the security responsibility to the users of the software. It is then only fair that users know how to configure the software’s security settings, so the project’s documentation needs to explain this.

If the software’s security depends on the environment, the installation documentation should tell users what they need to configure in that environment to improve security. Make sure that the default configuration is secure, or at least document very clearly how to secure the installation. Do not follow the example of Docker, which automatically bypassed the local firewall on Linux and thereby exposed containers to the outside world [Meyer] [Levefre].
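One way to combine configurable security with secure defaults is to make every default the secure choice and to report explicitly when a user weakens it. Below is a minimal Python sketch. The setting names (`bind_address`, `require_tls`, `allow_anonymous_access`) and the `check_settings` helper are hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecuritySettings:
    """Hypothetical security settings; every default is the secure choice."""
    bind_address: str = "127.0.0.1"       # listen on the local machine only
    require_tls: bool = True              # encrypt traffic by default
    allow_anonymous_access: bool = False  # require authentication by default


def check_settings(settings: SecuritySettings) -> list[str]:
    """Return one message for every setting that weakens a secure default."""
    problems = []
    if settings.bind_address in ("0.0.0.0", "::"):
        problems.append("listening on all interfaces: make sure a firewall restricts access")
    if not settings.require_tls:
        problems.append("TLS is disabled: traffic can be read and modified in transit")
    if settings.allow_anonymous_access:
        problems.append("anonymous access is enabled")
    return problems


if __name__ == "__main__":
    # A user who deliberately weakens the defaults is told what that implies.
    for message in check_settings(SecuritySettings(bind_address="0.0.0.0")):
        print("warning:", message)
```

The messages mirror what the documentation should explain: what changing a default means, and what the user then becomes responsible for.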

Some threats are very hard to mitigate, and you may choose to accept the risk. If you do, make this clear to the end users of the software. After all, their security needs may be very different from yours. If they are aware of such risks, they may choose not to use the software, to mitigate these threats themselves, or perhaps to contribute a solution.

Like medicine, software should come with a manual that tells you how to use it and explains the risks.

When to do what

In a commercial development environment, where developer time is available and allocated, you can plan security practices as part of the development process. In an open source project, developer time is not guaranteed.

An OSS project is typically distributed, and communication is asynchronous. This makes it harder to coordinate activities and plan releases. This is not to say that OSS development is unstructured: as in any other software development process, a project without structure would quickly descend into chaos, which harms the resulting software.

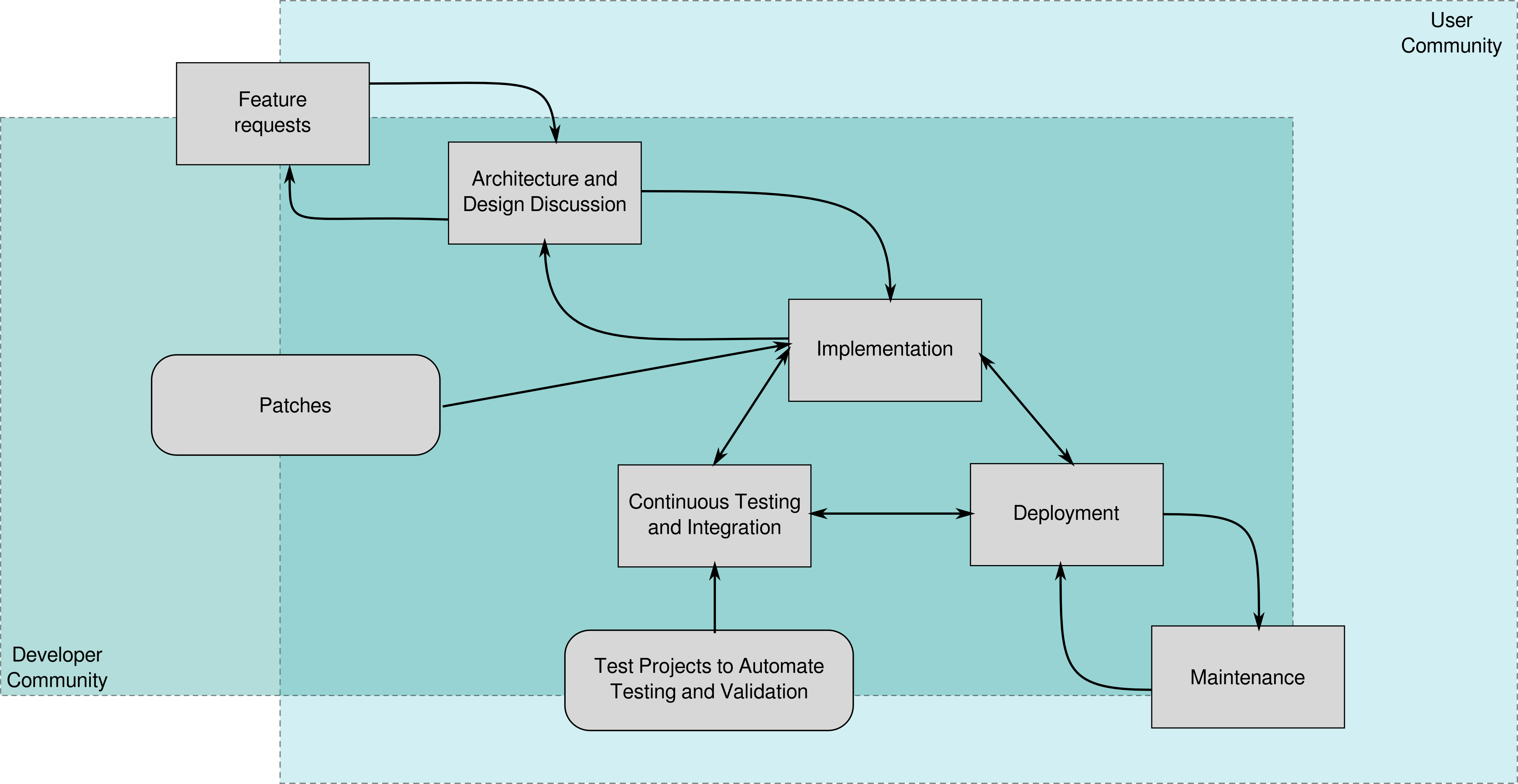

The Linux Foundation describes the open source feature life-cycle, using the feature as a development unit, instead of sprints, milestones or deliverables [LinuxFoundation]. Figure 1 shows this.

Figure 1: The open source feature life-cycle according to [LinuxFoundation].¶

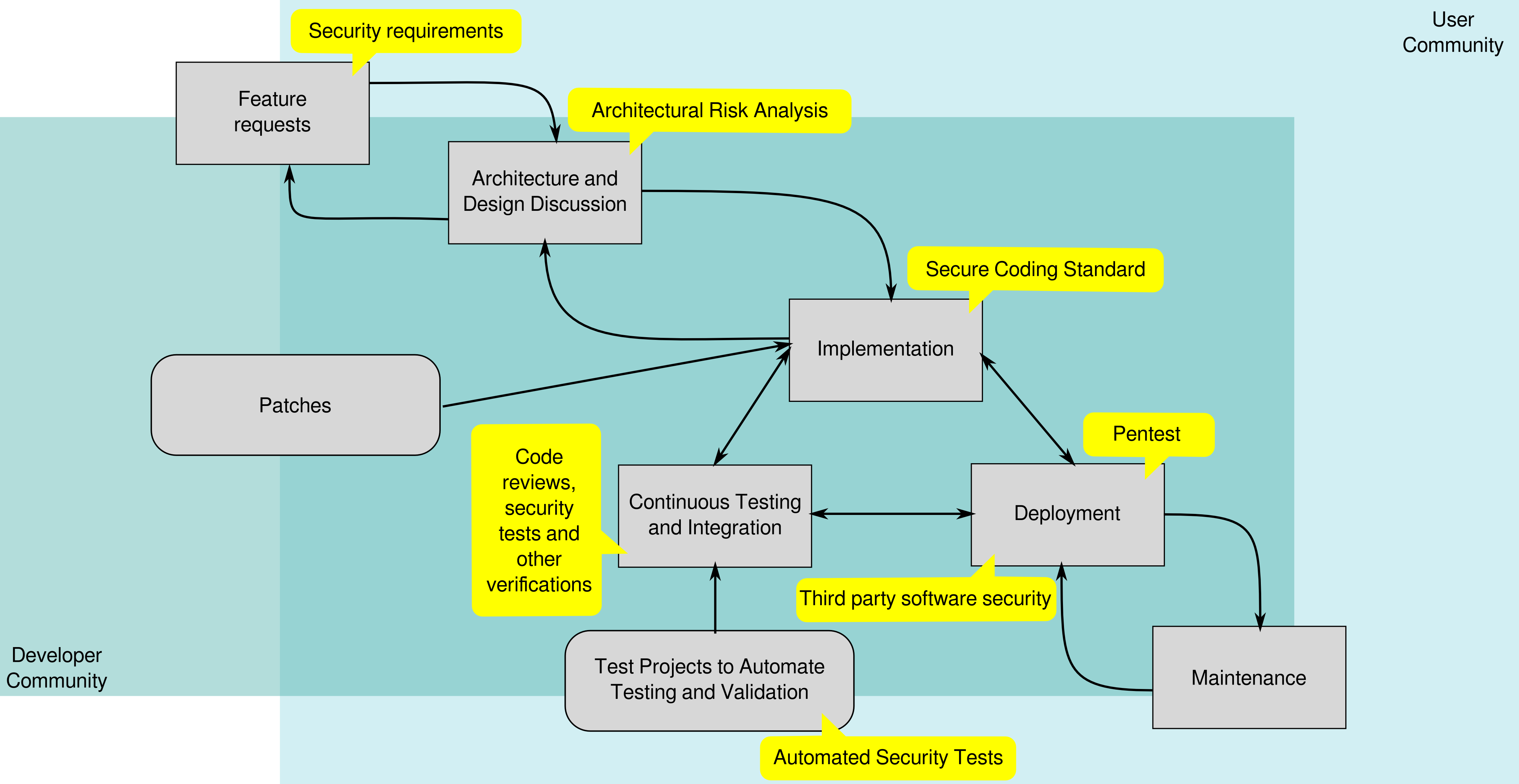

If we refine our model of things that can go wrong during the phases of the SDLC and apply it to this feature life-cycle, we can identify when to apply remedying activities. Figure 2 gives an example, your may choose a different approach.

Figure 2: The open source feature life-cycle with security activities.¶

These activities serve as safety nets that catch security issues as early as possible. To make sure that the activities are performed, you can establish quality gates in your workflow: a feature can only move to the next development phase once the corresponding security activity has been performed. For example, the architecture and design discussion cannot begin [1] until the security requirements are defined. It is usually up to the project maintainers to enforce these quality gates.
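A quality gate can be as simple as a lookup from each phase to the security activity that must be completed before a feature may enter that phase. The sketch below uses hypothetical phase and activity names. They are for illustration only and are not taken from the Linux Foundation life-cycle or from Figure 2. Only the gate in front of the design phase follows the example in the text.

```python
# Hypothetical phases of a feature, in order.
PHASES = ["proposal", "design", "implementation", "merge", "release"]

# Hypothetical security activity that must be completed before a feature
# may enter the given phase.
REQUIRED_BEFORE = {
    "design": "security requirements defined",
    "implementation": "threat model reviewed",
    "merge": "security code review done",
    "release": "security tests passed",
}


def can_advance(current_phase: str, completed: set[str]) -> tuple[bool, str]:
    """Apply the quality gate between current_phase and the next phase."""
    index = PHASES.index(current_phase)  # raises ValueError for unknown phases
    if index == len(PHASES) - 1:
        return False, "feature is already in the last phase"
    next_phase = PHASES[index + 1]
    required = REQUIRED_BEFORE.get(next_phase)
    if required is not None and required not in completed:
        return False, f"cannot enter {next_phase!r}: {required!r} is missing"
    return True, f"may enter {next_phase!r}"


if __name__ == "__main__":
    # The design discussion may not start before the security requirements exist.
    print(can_advance("proposal", set()))
    print(can_advance("proposal", {"security requirements defined"}))
```

In practice, maintainers would apply such a rule by hand or through labels in the issue tracker. The code only makes the rule explicit.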

Discussions about security

Karl Fogel describes some communication pitfalls in his book Producing Open Source Software. These pitfalls also apply when discussing security. Security is often more complicated than one thinks, and not everybody knows all the details. There are risks of misinterpretation and misunderstanding, too. At the same time, many people have strong (personal) opinions about security and forget to consider the particular security needs or environmental restrictions that apply. This often leads to a lot of confusion. Guidelines for asking smart security-related questions can help. Here are some suggestions:

- Make the security context clear: what is the security need, and what is the environment? If the context is not clear, find out more details before discussing the question.
- When claiming something, explain why. Don’t just say that using HTML5 localStorage is insecure; explain which threats it introduces. Refer to sources or, even better, provide a proof of concept that can be tested. Be precise and keep things factual.
- Realize that a security solution may be incorrect. Security is complex, and even experts occasionally miss some details.
- Some security needs may be unrealistic. Politely explain why you do not want to address them, and suggest alternative mitigations.

Dealing with security findings

The security activities will result in security findings. If (temporary) secrecy is important to your project, make sure that the results of these activities are available only to a selected group of team members until the findings can no longer do harm.

Security findings can lead to:

- new features (e.g. technical mitigations)
- changed architecture
- changed code
- changed secure coding standard
- extra tests
- improved security activities (e.g. better test methods)

By responding to these findings, we will not only improve the security of the software, but also make the development process more robust.

Finding people

One of the big bottlenecks in software security is the lack of skilled people. Today there are plenty of courses for becoming a certified pentester, and pentesting offers attractive career prospects, so one might expect pentesters to be willing to join an open source project to sharpen their skills. While this sounds like a great idea, be aware that pentesting by itself is not enough: you need people with both programming and security skills.

Rather than teaching pentesters how to program, it may be more effective to let developers learn software security skills. Ira Winkler recommends “starting with capable people (even though their skill sets might be tangential) and having them apprentice under skilled people.” [Winkler].

Working with software developers, I notice that simply prompting them to ask the right questions about security raises enough awareness. From there, it is often a matter of having enough technical knowledge to come up with a solution. Of course, they will need to know the details of certain attacks, but most of these are well documented on the internet, for example on the OWASP website [OWASP].

Lakhani and Wolf researched the main motivators for participating in open source projects. The top motivators are [LakhaniWolf]:

- enjoyment-based intrinsic motivation
- user need
- intellectual stimulation
- improving programming skills

Security can trigger or promote these motivators. People with an interest in security testing enjoy finding security issues and therefore might be interested in doing this in your project. At the same time, one can be intrinsically motivated to build something that resists as many attacks as possible. There is a strong need for secure products. In fact, as we mentioned in the introduction, many believe that open source software is more secure [OSSSurvey2017], so why not live up to that expectation and show that it is true? Secure design can be challenging and requires thorough analysis: no lack of intellectual stimulation there. Lastly, learning how to program securely definitely improves your skills.

External motivators, such as bug bounties, may work, but they have some caveats. First of all, they can be costly: not only do you have to reserve enough money to pay out bounties, but you also have to manage and judge the submissions. The rules must be clear, otherwise there will be disputes about payouts. Many bug bounty programs attract people who report non-issues, or who try to claim a bounty for a denial-of-service attack on your project’s website. Furthermore, bug bounty hunters are not aware of your security requirements and may have a different view of your software’s security. Finally, the search is undirected: many people will look for the same kinds of bugs, while other kinds are neglected. As Traxiom puts it: “Bug bounties are prone to group-think, where often very obvious vulnerabilities are neglected because everyone assumes someone else has already looked for it.” [Traxiom].

Some believe that bug bounties focus too much on rewarding the discovery of bugs rather than their fixing. NCC Group started a fix bounty program that rewards both finding and fixing bugs [NCCGroup]. While this is a noble initiative, it is also prone to abuse: an attacker could deliberately introduce vulnerabilities while participating in a project, and later fix them to claim the fix bounty. This could explain why the NCC Group website does not explain how to participate in the fix bounty program, but perhaps that is just a coincidence.

Getting started with security practices

The number of security practices may sound daunting at first. Fortunately, you do not need to execute all practices all the time. Catching security mistakes early is better, but a running program is still more important. As long as mistakes are caught before a release, you have some flexibility. It is up to the project maintainers to find a way that works.

Start small and simple. Doing all practices fully and immediately will certainly disrupt the workflow. You will create some technical debt that you must resolve later, but that is unavoidable. Besides, not getting started at all is worse than getting started with only a few practices.

In a more mature project, many existing features will need to be analyzed for security, which creates extra work. How you deal with this technical debt depends on where you expect security problems (in existing code or in new code), how important new features are compared to checking the security of old features, and so on.

Reported security issues from the past may indicate what problems need fixing first. For example, if there were multiple buffer overflow vulnerabilities, you may want to look at ways to prevent those in the future. If third party libraries are a problem, look at ways to deal with those as part of the development process. An initial security review, for example via a pentest or code review, may help to identify more problems.
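Tallying past reports by weakness class is a quick way to see where to start. The Python sketch below assumes that the reports have already been exported from the issue tracker and tagged by hand. The `past_reports` data is invented for illustration, and `weakness_ranking` is a hypothetical helper.

```python
from collections import Counter

# Hypothetical export of past security reports, each tagged with a weakness class.
past_reports = [
    {"id": 101, "weakness": "buffer overflow"},
    {"id": 117, "weakness": "vulnerable third-party library"},
    {"id": 123, "weakness": "buffer overflow"},
    {"id": 140, "weakness": "cross-site scripting"},
    {"id": 152, "weakness": "buffer overflow"},
]


def weakness_ranking(reports: list[dict]) -> list[tuple[str, int]]:
    """Rank weakness classes by how often they were reported, most frequent first."""
    return Counter(report["weakness"] for report in reports).most_common()


if __name__ == "__main__":
    for weakness, count in weakness_ranking(past_reports):
        print(f"{count:3d}  {weakness}")
```

Here the recurring buffer overflows would be the first candidate for a structural fix, such as a coding standard rule or a dedicated test.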

Automation is another promising direction. More and more tools appear that automate much of the security work, many of which this guide mentions. As secure software development practices become more common, the availability of supporting tools will increase. Keep in mind however that tools are not perfect and are not an excuse to stop doing other practices.

Growing the security practices

As you are improving your secure SDLC, you may want to compare your process to other processes, or to how you were working last year.

BSIMM

BSIMM was one of the first software security maturity models. It observes the security practices of various organizations without judging which practices work [BSIMM]. This implies that a commonly followed practice is not necessarily an effective one [2]. The BSIMM document describes many software security practices that you can draw inspiration from. If you fill in the scorecard, you can compare your project with the average score of the participating organizations. However, because these organizations are not open source projects, such a comparison may not be very useful.

SAMM

OWASP developed SAMM to let an organization build a software security assurance program, rather than compare it with other organizations [SAMM]. As such, SAMM is prescriptive in its guidance, while BSIMM is descriptive and free of judgment. SAMM is divided into four business functions with three security practices each, and its scoring model lets you rate each practice at one of three maturity levels. Although OWASP states that any organization can use SAMM regardless of its style of development, not all practices may fit equally well into an open source project workflow. Still, the scoring model is well documented, easy to apply, and describes useful practices.

CII badge

The Core Infrastructure Initiative’s badge program is a maturity model focused on open source software projects. It defines project criteria at various levels using RFC style key words such as MUST, SHOULD, and MAY [CIIBadge].

Security is not the only criterion: other aspects, such as basic open source development practices, use of change control tools, and attention to quality, are also part of the maturity model.

You can get a badge through self-certification via a questionnaire [3]. The security criteria in the badge program cover most of what we have discussed in this guide: secure development knowledge, cryptographic practices, third-party code vulnerabilities, and static and dynamic code analysis. There are no explicit criteria for security requirements, threat modelling, or architectural risk analysis. The program does mention secure design principles, but while useful, they do not focus on identifying threats the way threat modelling does. One of the goals of the badge program was to remain practical and not to burden small projects with heavy criteria, and perhaps threat modelling was considered too heavy. The badge program itself, however, documents its own security extensively, from security requirements and architecture to secure development practices. The badge program is a great step towards making security visible, at least for a project's security practices. I would like to see criteria for threat modelling and for making the software product's security visible, as this guide advocates.

Summary

Making security visible is essential to integrating security practices into an open source project. However, there may be reasons to keep information about security vulnerabilities private for a while, until the issue is resolved. A TMV list keeps track of security requirements and expected threats, how they will be mitigated, and how the mitigations will be verified. This list gives a quick overview of the software’s security status. If you make security configurable, your software can run securely in environments with different security needs. The project’s documentation should clearly describe how to do this, preferably in a security chapter. Also document security issues that you cannot or will not resolve.

Execute security practices along with the workflow of your project, and implement quality gates to ensure that they are executed. Discussions about security have their own problems that can quickly cause confusion; discussion guidelines can help keep them clear. Adequately responding to security findings will improve both your software and your process.

It can be difficult to find skilled software security people for your project: the combination of security and programming expertise is still rare. It is easier to let software developers learn security than to let security people learn how to program, so it makes sense to make all contributors aware of security and of the security practices in your project. External motivators such as bug bounties do not work as well as internal motivators, such as the opportunity to learn and to create something useful.

Start small and simple with the security practices. Existing code will likely carry some technical debt. Look at what has gone wrong in the past, or do a quick security scan or test of your code. From there, improve gradually. Several maturity models can help you evaluate where you are and guide you towards a more secure development process.

[1] Or at least, it cannot be formally approved or signed off. Quality gates should not slow down progress, but they should prevent you from rushing off in the wrong direction.

[2] After all, just because many people run a certain closed source operating system does not mean that it works well.

[3] Of course, one can cheat with self-certification, but since everybody can verify the criteria in an open source project, there is an incentive not to do this.